Without Limits Series Examines Automated Inequality

Virginia Eubanks, an associate professor of political science at the State University of New York at Albany, spent seven years researching the impacts of high-tech tools designed to eliminate bias in U.S. public and social services agencies. In her book, Automating Inequality: How High-Tech Tools Profile, Police, and Punish the Poor, Eubanks describes how tools designed to address bias actually shift and conceal bias, with the most punitive systems aimed at the poorest Americans.

Eubanks shared her book’s provocative thesis during a Feb. 5, 2019, talk in the Lecture Center, as part of the “Without Limits: Interdisciplinary Conversations in the Liberal Arts” series’ year-long examination of the themes of automation, artificial intelligence and interactivity.

Citing efforts to automate eligibility processes in Indiana's public services system, match unhoused people with available housing in Los Angeles County, and predict child abuse and neglect in Pennsylvania's Allegheny County, Eubanks argued that such digital processes rationalize a "narrative of austerity."

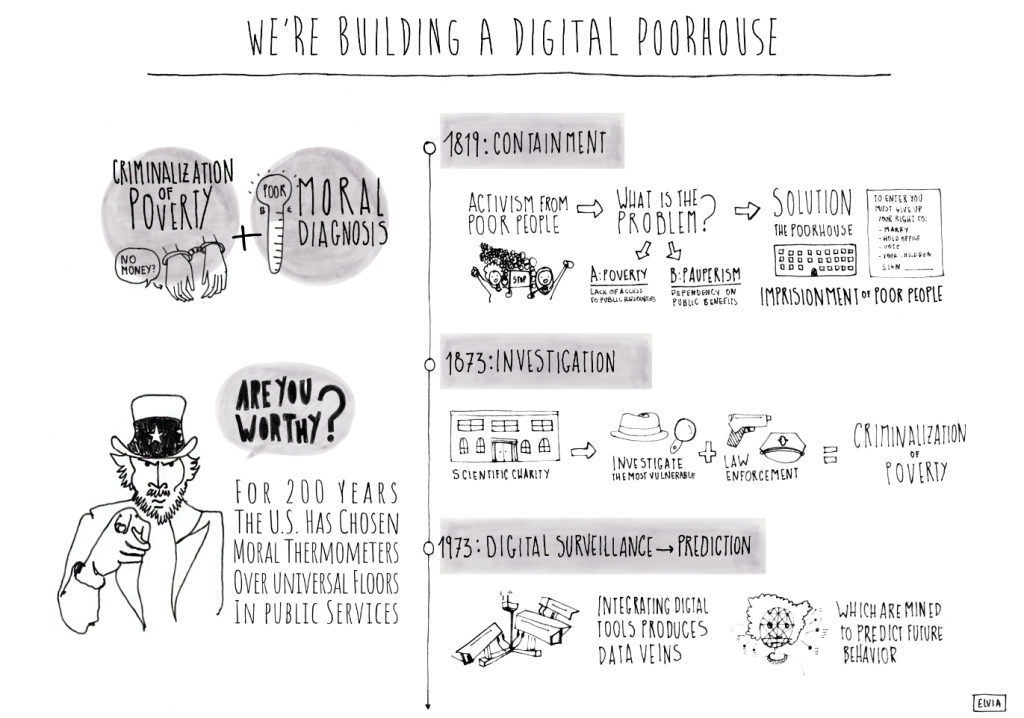

Eubanks traced the austerity narrative to the aftermath of the 1819 U.S. depression, when economic elites grew fearful of organizing among the poor and working class. The elites sought to distinguish between poverty, which they acknowledged as a genuine economic condition, and pauperism, a condition in which, in their view, dependence on handouts robbed people of the will to work.

Poorhouses were created to manage poverty in the U.S. by incarcerating those who asked for public assistance until they could support themselves. Those who entered a poorhouse gave up the right to vote and hold office and, in many places, the right to marry and to raise their own children, many of whom were sent to the Midwest to work on farms.

Eubanks offered the term "digital poorhouse" to describe the emergence of tools that automate a kind of moral diagnosis of worth rather than "building a universal floor under us all." She described the digital poorhouse as a narrative of austerity for the digital age.

Eubanks bolstered her argument with personal stories of people negatively impacted by efforts to automate public and social services.

In 2006, Indiana Gov. Mitch Daniels signed a $1.3 billion contract with a consortium of high-tech companies, including IBM, to create a system that replaced face-to-face interactions with local caseworkers (who, Eubanks noted, are among the most racially diverse, female, and working-class parts of the workforce) with private regional call centers that responded to tasks funneled into a workflow management system.

The new system produced a million denials in its first three years, often for the catch-all reason of "failure to cooperate," which meant only that a mistake had been made at some unidentified stage of the process.

In the fall of 2008, Omega Young of Evansville, Indiana, received a "failure to cooperate" determination after she was unable to recertify for Medicaid while hospitalized with terminal cancer. Although she had informed the regional call center that she would miss her scheduled telephone interview, her benefits were terminated, leaving her unable to afford her medications, pay rent, or access free transportation to medical appointments. She died on March 1, 2009; the following day, she won her appeal for wrongful termination and her benefits were restored.

In Allegheny County, Pennsylvania, Eubanks met Angel Shepherd and Patrick Grzyb, who, while raising their daughter and granddaughter, had endured multiple investigations by the county's Office of Children, Youth and Families (CYF). One was an investigation of medical neglect after Grzyb could not afford his daughter's antibiotic prescription following an ER visit. The couple had also been the subject of multiple phoned-in reports of child abuse and neglect. Although those cases were closed, each interaction was entered into an electronic case file held in a data warehouse that fed the Allegheny Family Screening Tool. The tool rated the couple as high risk because of the number, not the nature, of their past interactions with public services.

Because the Allegheny Family Screening Tool extracts data from public programs that chiefly serve the poor and working class, Eubanks noted that “the limits of that data really shapes what the model is able to predict,” thus fostering a “kind of poverty profiling” that left couples like Shepherd and Grzyb in constant fear that the children in their charge would be removed to foster care.

Though designers of Allegheny County’s Family Screening Tool sought to eliminate bias in intake call screeners’ decision-making by removing human discretion from the equation, Eubanks argued that they effectively moved this discretion to the economists, data scientists and social scientists who built the predictive risk models. These individuals, Eubanks asserted, “don’t always have great on-the-ground information about what people’s lives are like who come into contact with CYF.”

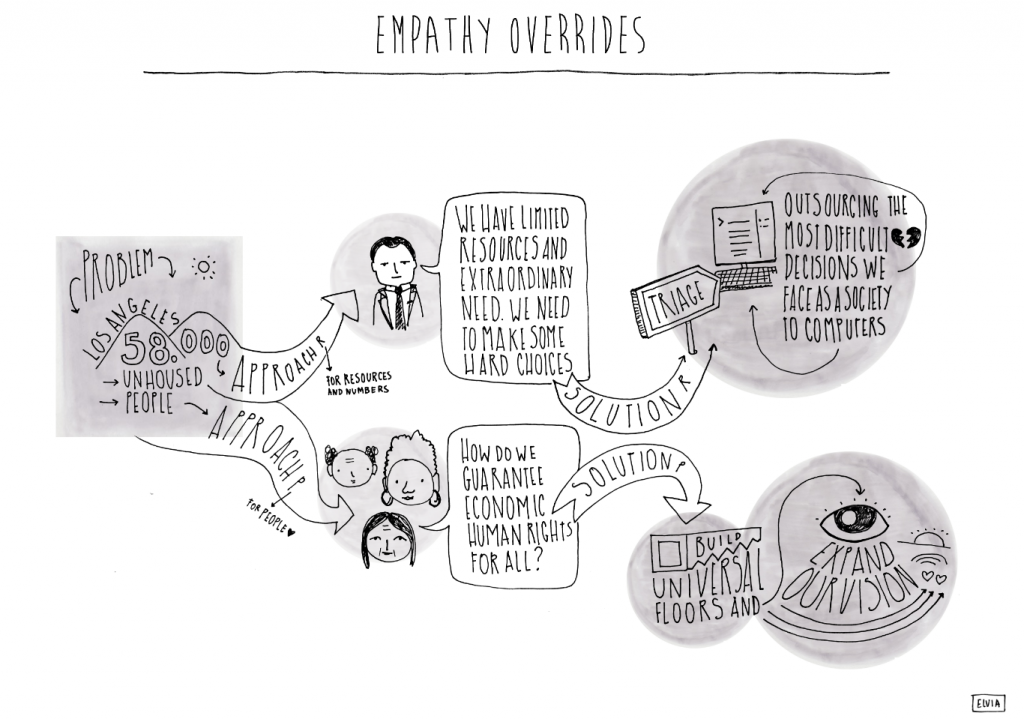

In Los Angeles County, the Vulnerability Index-Service Prioritization Decision Assistance Tool (VI-SPDAT), described as a Match.com for the county's housing crisis, asks unhoused residents a litany of often intrusive questions. Each respondent is assigned a vulnerability score, and an algorithm may then match them with available housing.

Eubanks told the story of Gary Boatwright, who at age 64 had filled out the survey three times. Despite having high blood pressure, he was mostly healthy and was therefore deemed "well enough to survive, but not so vulnerable that he merited help."

Though Eubanks acknowledged the efforts of the "smart, well-intentioned people" behind the automation of public and social services, she criticized the practice of outsourcing society's most difficult decisions to computers, decisions that for her come down to "who gets access to their basic human needs and who can wait."

Acknowledging that a "digital triage" is meaningful as a concept only if more resources are actually coming, Eubanks called for a movement away from punitive models that determine who is most deserving of help and toward a broader focus on basic economic human rights.

“We can decide as a country that there’s a line below which no one’s allowed to go: no one goes hungry; no one lives in a tent on a sidewalk for a decade; no family is split up because their parents can’t afford a child’s medication,” she said.

Noting that such determinations define a nation’s very soul, Eubanks ended her talk with a word of warning: “If we let this moment force us to outsource our moral responsibility to care for each other to computers, we really have no one but ourselves to blame when these systems supercharge discrimination and automate austerity.”

Additional Spring Programming

The Without Limits series, which strives to make connections among the many aspects of the liberal arts while inviting campus and community partners to investigate the meaning and role of liberal education in the twenty-first century, concluded with two additional spring events that expanded on this year’s theme: “March of the Machines.”

Stephen DiDomenico, an assistant professor of communication, addressed isolation and the outsourcing of human relationships to machines in a Feb. 26 screening and discussion of the 2013 film Her, in which Joaquin Phoenix plays a lonely, introverted man who falls in love with an operating system named Samantha, voiced by Scarlett Johansson.

Finally, DiDomenico joined faculty members Rebecca Longtin, an assistant professor of philosophy, and Gowri Parameswaran, a professor of educational studies and leadership and affiliate of the Women's, Gender and Sexuality Studies Department, for the March 28 panel "March of the Machines: Faculty Perspectives," which connected many of the series' themes. Speaking from the perspectives of their disciplines, panelists explored topics including AI and human consciousness, social media hacking and hate speech, and the use of mobile phones in ordinary conversation.

For more information on Without Limits, visit the series website.